Although the capabilities of AI tools are growing rapidly, we should be careful when using them. It is important to think critically about whether using AI is appropriate in your situation.

Using AI for Reference Lists

Have you ever asked ChatGPT to generate a list of publications and other sources?

The fact is – some of them may not even exist!

AI-generated citations are often fully or partially fake (e.g., the author is real but there was no study published in the year indicated).

Read: Why ChatGPT Generates Fake References? | LinkedIn

Read this article on LinkedIn about the tendency of Generative AI to create fake references. If you want to hear more of what people are saying about this, you may also find this Twitter thread useful: “Why does chatGPT make up fake academic papers?”

Apply Your Thinking:

Practice Checking AI Sources

Ask Copilot to generate a summary of a random topic. This could be anything, for example a summary of what different keys do on a keyboard. Then check the sources it has listed. Does each source actually exist, and does it support what the summary says? If so, then great! But try not to get complacent: get in the habit of doing this every time you use Generative AI.

Which brings us to our next hazard…

Using AI as Your Only Source of Information

Remember the section above, where we discussed how Generative AI can sometimes produce references that are completely false?

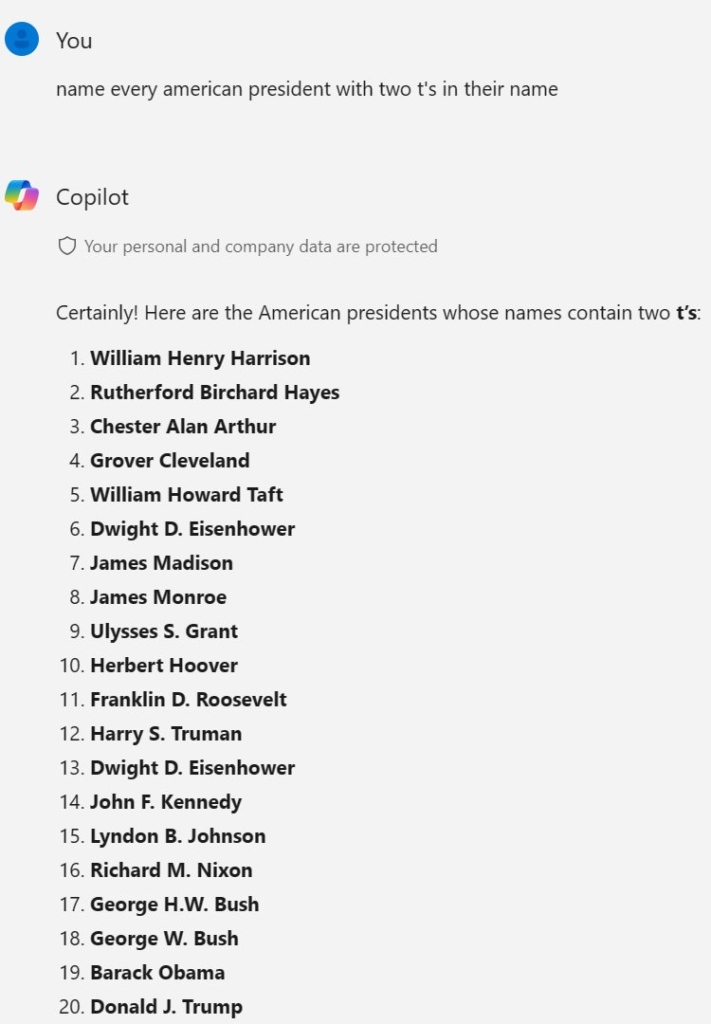

This can happen with any information that these tools produce. In fact, Generative AI does this so often that people have given this false information a name: AI Hallucinations.

Here is an example:

We will learn more about AI Hallucinations later, but for now, a good rule to follow before using Generative AI is to consider the stakes of your task.

In some situations, it doesn’t matter if the text is wrong. In other cases, you may have the expertise to identify incorrect information and can easily fill in the blanks. However, when a text needs to contain completely accurate information and is on a topic outside your expertise, using Generative AI is unlikely to be appropriate.

Stop and Reflect:

Compare Copilot to Wikipedia

Both offer information that sounds coherent, but both are also prone to errors. Look for a Wikipedia article on a subject you are familiar with, then search for the same subject using Microsoft Copilot. Which do you think is more reliable? Whichever you use, combine your use of tech with manual fact checking!